What is the best LLM for my use case?

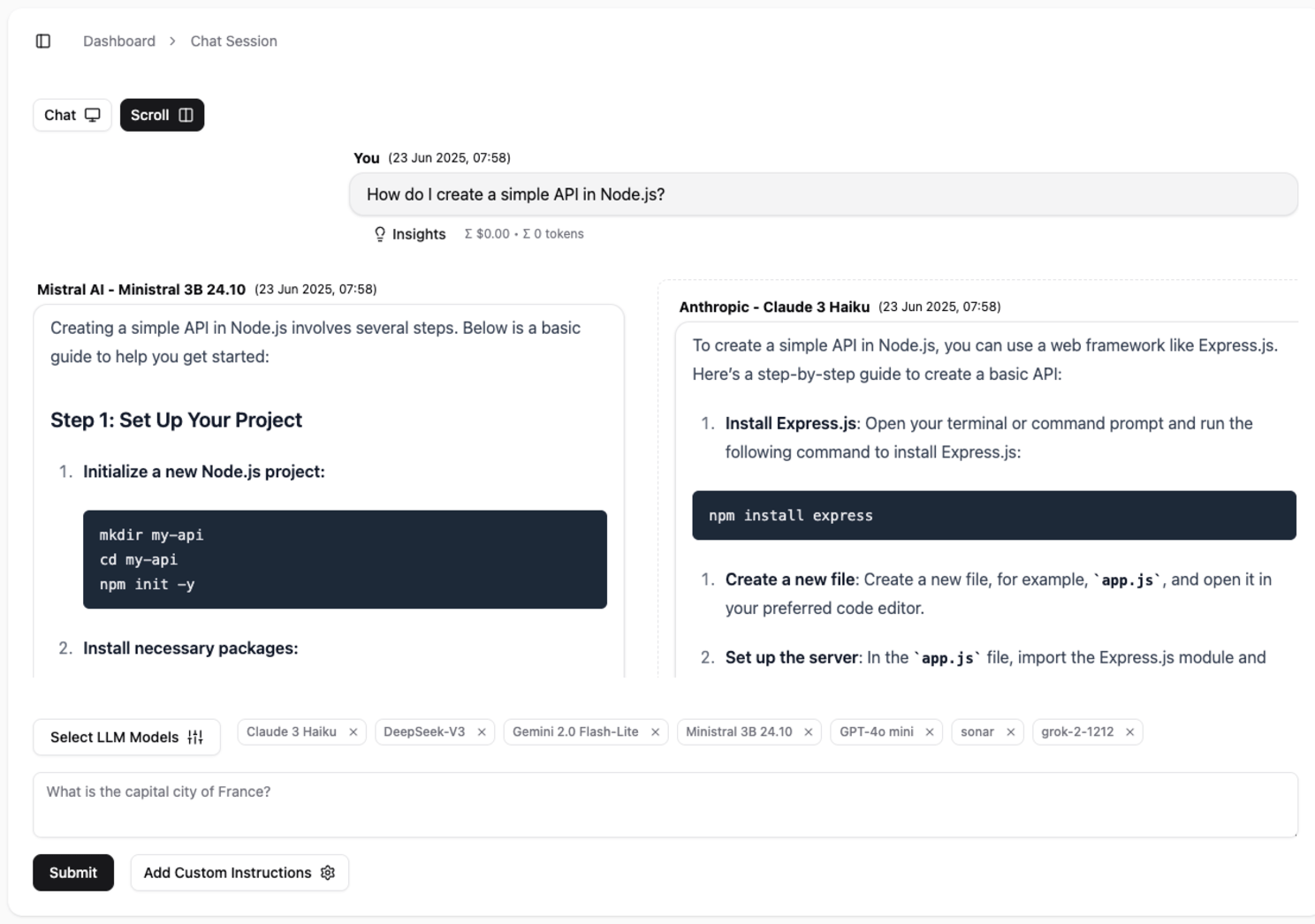

Building an AI feature or integrating an LLM into your app? Nomodo AI lets you compare dozens of top-tier language models side-by-side, so you can pick the right one for the job — no guesswork, no context switching.

What can you do with Nomodo’s multi-LLM chat?

- Pick from 30+ LLMs from 7 top providers, including OpenAI, Mistral, Anthropic, xAI, Google, and Perplexity.

- One prompt → multiple responses. Choose the models you want to test and get answers from all of them at once.

- Instant response comparison. Review them side-by-side and pick the one that best fits your use case.

- See real-time metadata for every response — including latency, cost, and token usage (input/output).

Suggested Use Cases – Try These Inside Nomodo

Choosing the Right Model for Your Application

Building a chatbot, summarizer, or custom Q&A tool? Write your production prompt, select 5–10 models (like GPT-4, Claude, Mistral), and run them in parallel. Compare outputs side-by-side to see which model best matches your tone, accuracy, or reasoning needs.
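
If you later want to reproduce the same fan-out in your own code, the idea can be sketched in a few lines of Python. This is a minimal sketch, not Nomodo's API: `call_model` is a hypothetical stand-in that returns a canned reply, and the model names are placeholders. Swap in whichever provider client you actually use.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def call_model(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real provider client; returns a canned reply."""
    return f"[{model}] reply to: {prompt}"


def compare_models(prompt: str, models: list[str]) -> dict[str, dict]:
    """Send one prompt to every model in parallel; collect text and latency."""

    def run(model: str) -> tuple[str, dict]:
        start = time.perf_counter()
        text = call_model(model, prompt)
        return model, {"text": text, "latency_s": time.perf_counter() - start}

    # One worker per model so slow models do not block fast ones.
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        return dict(pool.map(run, models))


if __name__ == "__main__":
    results = compare_models(
        "Summarize our refund policy in two sentences.",
        ["model-a", "model-b", "model-c"],  # placeholder model names
    )
    for model, result in results.items():
        print(f"{model}: {result['latency_s']:.4f}s  {result['text']}")
```

Threads are a reasonable fit here because each call mostly waits on the network; results come back keyed by model, in the order you listed them.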

Tuning Your Prompt for Reliability Across Models

Need your prompt to work across providers? Make small adjustments to your prompt and test across models in real time. This helps you identify which models are sensitive to phrasing and which ones are more consistent or robust.
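
One rough way to put a number on "sensitive to phrasing" is to run several rewordings of the same prompt and measure how often each model gives its most common answer. The sketch below assumes short, label-like outputs where exact matching makes sense; `fake_call_model` and the model names `steady` and `touchy` are made up for illustration and do not correspond to any real model.

```python
from collections import Counter
from typing import Callable


def consistency_by_model(
    variants: list[str],
    models: list[str],
    call_model: Callable[[str, str], str],
) -> dict[str, float]:
    """Per model, the share of prompt variants that yield its most common answer."""
    if not variants:
        raise ValueError("need at least one prompt variant")
    scores = {}
    for model in models:
        # Normalize case and whitespace so trivial differences do not count.
        answers = [call_model(model, v).strip().lower() for v in variants]
        top_count = Counter(answers).most_common(1)[0][1]
        scores[model] = top_count / len(answers)
    return scores


def fake_call_model(model: str, prompt: str) -> str:
    """Hypothetical stub: 'touchy' changes its answer when the prompt says 'please'."""
    if model == "touchy" and "please" in prompt.lower():
        return "Refund"
    return "billing"


if __name__ == "__main__":
    variants = [
        "Classify this ticket: I was charged twice.",
        "Please classify this ticket: I was charged twice.",
        "Ticket category? I was charged twice.",
    ]
    print(consistency_by_model(variants, ["steady", "touchy"], fake_call_model))
```

A score of 1.0 means the model answered the same way for every rewording. For long free-form outputs, exact matching is too strict and a human read-through (or a similarity measure of your choice) is the better judge.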

Testing an MVP Without Any Setup

Want to validate a product idea without managing API keys or integrations? Paste your core prompt into Nomodo, select a range of models, and get immediate feedback. No backend setup required.

Cost/Performance Optimization

Trying to balance quality and cost? Compare models like GPT-3.5 or Claude Instant with higher-end options like GPT-4 or Claude 3 Opus. Check metadata for token usage, latency, and price per request to decide which offers the best value for your use case.
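
The price-per-request figure is simple arithmetic on token usage: input and output tokens are billed separately, each at its own per-token rate. Here is a minimal sketch of that calculation. The model names and prices are placeholders chosen for illustration, not real provider rates, so plug in current numbers from each provider's pricing page.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    model: str
    input_tokens: int
    output_tokens: int
    latency_s: float


# Placeholder prices in USD per 1M tokens as (input, output); NOT real rates.
PRICES_PER_MILLION = {
    "budget-model": (0.50, 1.50),
    "premium-model": (10.00, 30.00),
}


def cost_usd(usage: Usage) -> float:
    """Cost of one request, with input and output tokens priced separately."""
    in_price, out_price = PRICES_PER_MILLION[usage.model]
    total = usage.input_tokens * in_price + usage.output_tokens * out_price
    return total / 1_000_000


if __name__ == "__main__":
    runs = [
        Usage("premium-model", input_tokens=1200, output_tokens=300, latency_s=2.1),
        Usage("budget-model", input_tokens=1200, output_tokens=300, latency_s=0.8),
    ]
    # Cheapest first, so the trade-off against latency is easy to read.
    for run in sorted(runs, key=cost_usd):
        print(f"{run.model}: ${cost_usd(run):.6f} per request, {run.latency_s:.1f}s")
```

With these placeholder numbers the premium model costs 20 times as much per request as the budget one, so the question becomes whether its output is that much better for your use case.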

Debugging a Failing Prompt

If a prompt that used to work starts producing strange outputs, test it across multiple models in Nomodo. If the issue appears in only one model, it is likely specific to that model or its provider. If it fails across the board, the prompt itself probably needs reworking.
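
The decision rule above can be written down as a small helper. This is only a sketch of the reasoning: the `triage` function and its return strings are hypothetical, and it assumes you have already decided, by eye or with your own checks, whether each model's output counts as a failure.

```python
def triage(failed_by_model: dict[str, bool]) -> str:
    """Map per-model pass/fail results for one prompt to a likely cause."""
    if len(failed_by_model) < 2:
        raise ValueError("need results from at least two models to compare")
    failures = [model for model, failed in failed_by_model.items() if failed]
    if not failures:
        return "no failure reproduced"
    if len(failures) == len(failed_by_model):
        # Every model struggles, so the prompt is the common factor.
        return "prompt likely needs reworking"
    if len(failures) == 1:
        return f"likely specific to {failures[0]}"
    return "mixed results: " + ", ".join(failures) + " failed"


if __name__ == "__main__":
    print(triage({"model-a": True, "model-b": False, "model-c": False}))
    print(triage({"model-a": True, "model-b": True, "model-c": True}))
```

The mixed case is worth a closer look by hand: if the failing models share a provider or a model family, that points back toward a provider-side cause.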