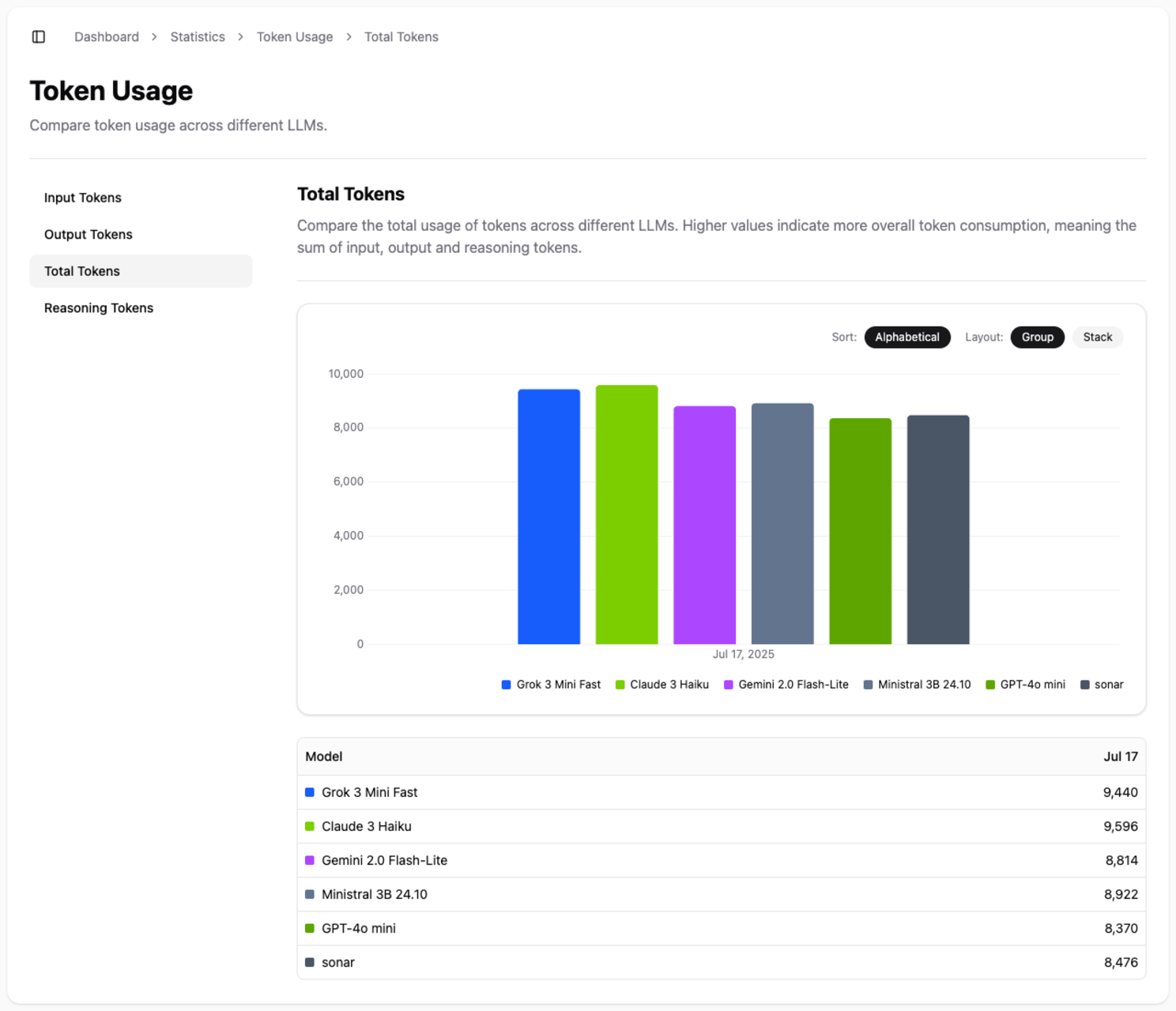

In the world of LLMs, tokens are currency. Understanding how you're spending them is key to building efficient and cost-effective applications. The Token Usage dashboard gives you a precise breakdown of your consumption patterns.

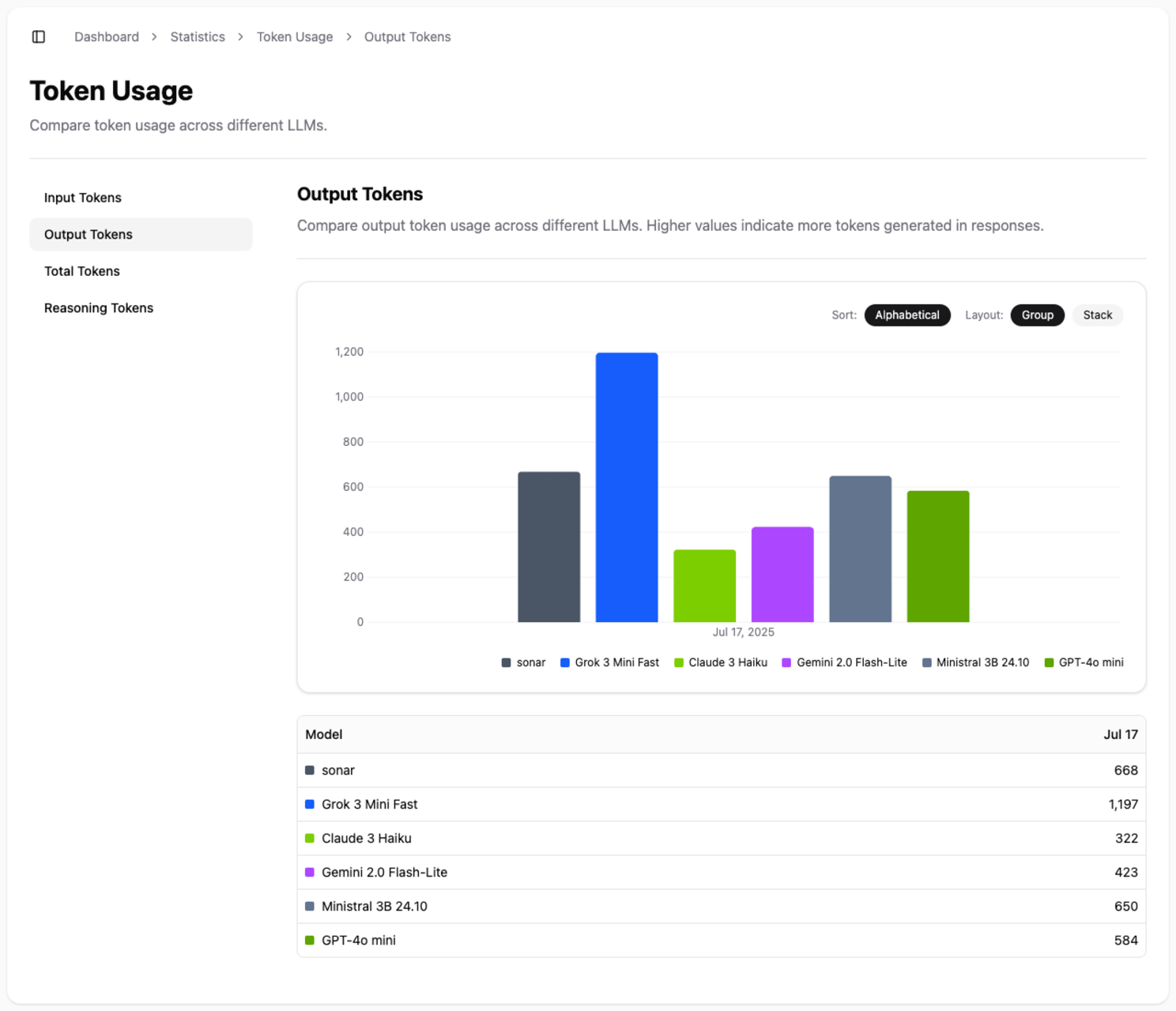

Here you can compare how different models consume tokens for the same tasks. See which models turn the same prompt into more input tokens, since each model counts tokens with its own tokenizer, and which generate more output tokens in their responses.

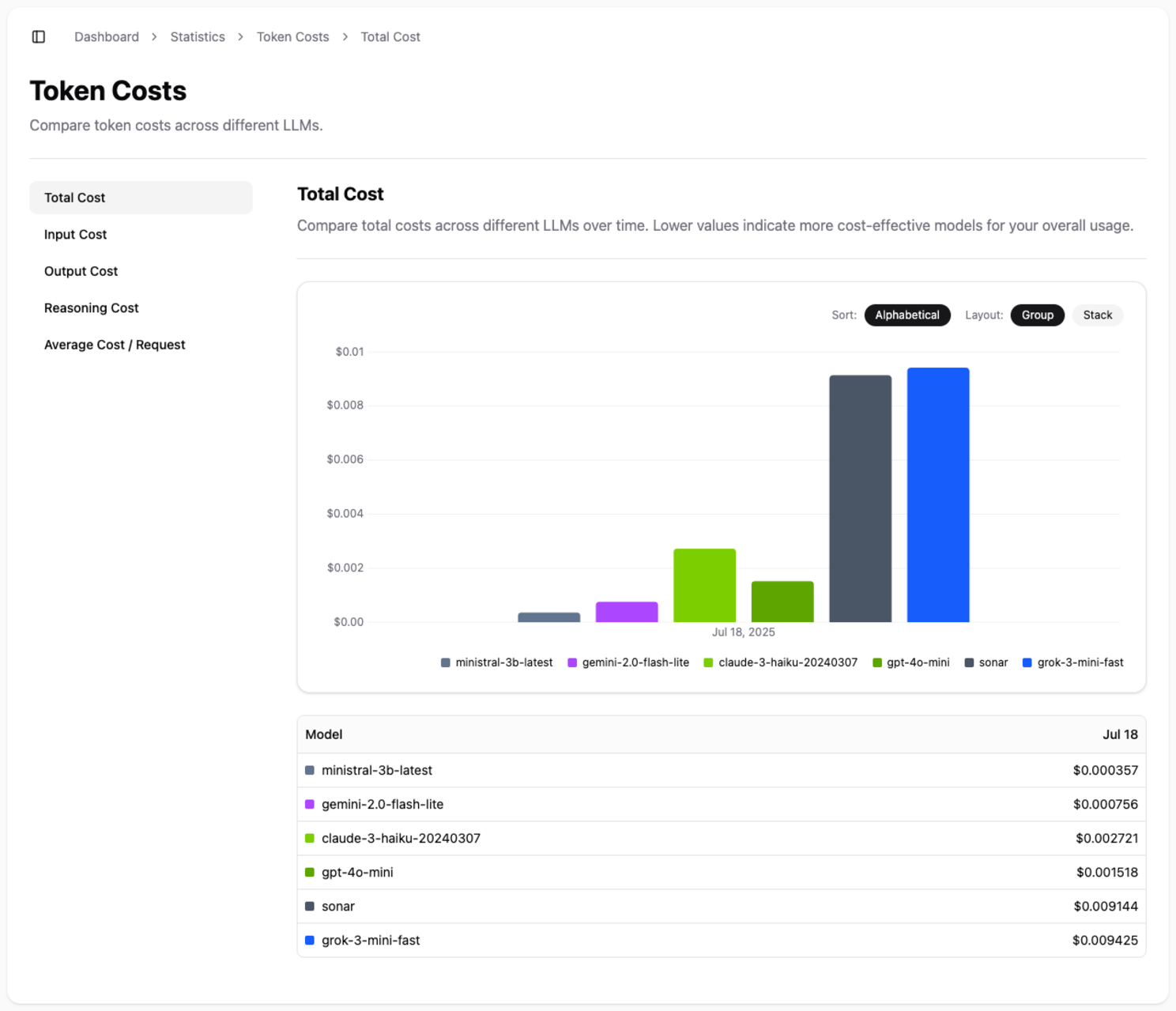

By analysing these patterns, you can refine your prompts to be more concise and select models that provide the right balance of detail and brevity. Both choices directly reduce your overall costs.
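To make the comparison concrete, here is a minimal sketch of the arithmetic behind this kind of breakdown: it totals input and output tokens per model and estimates cost. The model names, usage records, and per-million-token prices are all hypothetical placeholders, not data from the dashboard or real vendor pricing, and `summarize` is an illustrative helper rather than part of any product API.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1 million tokens (illustrative only).
PRICES = {
    "model-a": {"input": 1.00, "output": 4.00},
    "model-b": {"input": 0.50, "output": 2.00},
}

# Hypothetical usage records for the same tasks run on two models.
RECORDS = [
    {"model": "model-a", "input_tokens": 1200, "output_tokens": 300},
    {"model": "model-a", "input_tokens": 800, "output_tokens": 200},
    {"model": "model-b", "input_tokens": 1500, "output_tokens": 900},
]


def summarize(records, prices):
    """Total input/output tokens per model and estimate the cost in USD."""
    totals = defaultdict(lambda: {"input_tokens": 0, "output_tokens": 0})
    for record in records:
        totals[record["model"]]["input_tokens"] += record["input_tokens"]
        totals[record["model"]]["output_tokens"] += record["output_tokens"]

    summary = {}
    for model, tokens in totals.items():
        price = prices[model]
        cost = (
            tokens["input_tokens"] * price["input"]
            + tokens["output_tokens"] * price["output"]
        ) / 1_000_000
        summary[model] = {**tokens, "cost_usd": cost}
    return summary


if __name__ == "__main__":
    for model, stats in summarize(RECORDS, PRICES).items():
        print(model, stats)
```

Because output tokens are often priced higher than input tokens, a model that looks cheap per token can still cost more overall if it tends to produce longer responses, which is why it helps to look at both columns side by side.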